Our main goal is to build on existing GS-to-mesh reconstruction algorithms and improve them in two ways: by incorporating VLMs to selectively crop objects in 3D, and by meshing the cropped Gaussians with improved volumetric GS meshing algorithms. Optionally, we want to infer color information to texture the final mesh, and to use 3D diffusion pipelines to repair the extracted Gaussians so that volumetric meshing gives better results. To view our results, we will also build a Windows application that rasterizes the reconstructed mesh alongside the camera frustums of the original views used to generate the GS. If time permits, we will tie together a full pipeline that generates the GS from phone video, extracts the desired objects, fills in holes, and finally reconstructs and renders the mesh.

Challenge and Motivation: 3D Gaussian Splatting (3DGS) has emerged as a powerful and efficient learning-based reconstruction algorithm. However, despite its impressive rendering quality and performance, 3DGS is still far from being practical for downstream applications. Specifically, current 3DGS methods face the following key challenges:

1. Incompatibility with traditional content pipelines: Gaussian splats are not easily editable. Unlike traditional 3D assets with meshes, textures, and materials, GS representations cannot be easily relit or edited. This severely limits their utility in production environments.

2. Object Isolation and Reusability: 3DGS methods typically reconstruct entire scenes holistically. However, in many practical use cases, creators only need specific objects from a scene. Current GS representations make it difficult to extract reusable, portable 3D objects.

3. Incomplete geometry due to limited observations: Because 3DGS relies on image inputs, it fails to reconstruct parts of the scene that are not visible during capture (e.g., the bottom of a cup). This leads to incomplete objects, further limiting their usability.

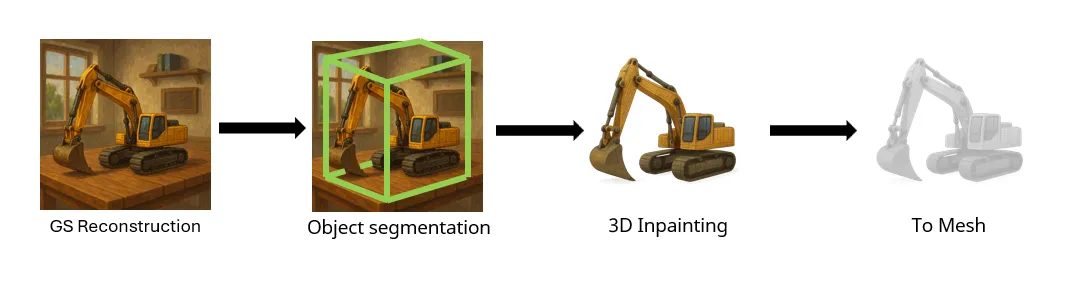

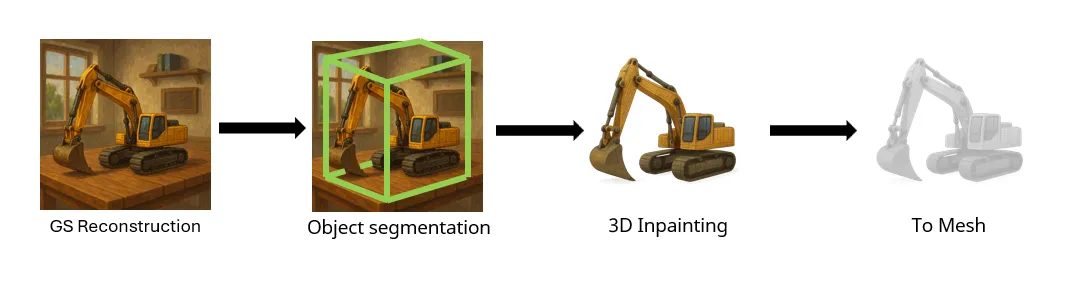

To address these limitations, we propose a pipeline that selects points from a 3DGS scene based on text prompts and then transforms them into a clean, complete (textured) mesh. The proposed pipeline consists of five stages:

1. Scene reconstruction using an existing 3DGS algorithm and multi-view image inputs.

2. Object segmentation via vision-language models (VLMs) to locate a target object given a text prompt, and extract its bounding region from the GS scene.

3. [Optional] 3D inpainting using a diffusion model trained for 3DGS completion, to fill in missing geometry and obtain a complete object representation.

4. Mesh reconstruction by estimating multi-view depth from rendered views of the object. We plan the following adaptations. Because GS2Mesh [4] relies on depth and occlusion estimates, it tends to miss local features of mesh surfaces (such as detail comparable to displacement mapping). We can combine the depth fusion step in GS2Mesh (a TSDF algorithm conditioned on predicted stereo depth) with SuGaR’s mesh constraint or IsoOctree to capture this local information. This may also reduce the holes that appear in reconstructions of reflective surfaces (such as windows), since the point clouds themselves are continuous.

5. Visualization by rendering the output mesh in a Windows application, with an option to show the camera poses used to create the initial Gaussian splat.
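To make stage 2 concrete, the extraction step can be sketched as a filter that keeps only the Gaussians whose centers fall inside the object's bounding region. This is a minimal illustration, assuming the VLM stage has already produced an axis-aligned 3D box; the `Gaussian` record and the `crop_to_box` helper are hypothetical names, and a real 3DGS file carries more per-splat fields (scale, rotation, color coefficients).

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Gaussian:
    """Hypothetical minimal splat record (real 3DGS data has more fields)."""
    center: Vec3
    opacity: float


def crop_to_box(gaussians: List[Gaussian], box_min: Vec3, box_max: Vec3) -> List[Gaussian]:
    """Keep Gaussians whose centers lie inside the axis-aligned box (inclusive)."""
    def inside(point: Vec3) -> bool:
        return all(lo <= c <= hi for c, lo, hi in zip(point, box_min, box_max))

    return [g for g in gaussians if inside(g.center)]


# Toy scene: two splats inside the unit cube, one outside it.
scene = [
    Gaussian((0.1, 0.2, 0.3), 0.9),
    Gaussian((2.0, 0.0, 0.0), 0.8),
    Gaussian((0.5, 0.5, 0.5), 0.4),
]
cropped = crop_to_box(scene, (0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
print(len(cropped))  # 2
```

Testing only the centers ignores each Gaussian's spatial extent, so splats that straddle the box boundary may need a looser box or a per-splat extent check in practice.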

In this project, we will mainly focus on the fourth stage, mesh reconstruction, where most of the engineering effort will go.
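For reference, the core of TSDF depth fusion can be sketched per voxel as a weighted running average of truncated signed distances. The snippet below is a generic illustration of that standard update, not the GS2Mesh implementation and not our planned adaptive variant; the function names, the truncation distance, and the sample depths are all assumptions chosen for the example.

```python
from typing import Optional, Tuple


def truncated_sdf(observed_depth: float, voxel_depth: float, trunc: float) -> Optional[float]:
    """Signed distance from voxel to observed surface along the ray, scaled to [-1, 1].

    Returns None when the voxel lies more than `trunc` behind the surface,
    because that observation says nothing reliable about the voxel.
    """
    sdf = observed_depth - voxel_depth
    if sdf < -trunc:
        return None
    return min(1.0, sdf / trunc)


def fuse(tsdf: float, weight: float, observation: Optional[float],
         obs_weight: float = 1.0) -> Tuple[float, float]:
    """Fold one observation into the voxel's running weighted average."""
    if observation is None:
        return tsdf, weight
    new_weight = weight + obs_weight
    return (tsdf * weight + observation * obs_weight) / new_weight, new_weight


# One voxel at depth 1.0 seen from three views (hypothetical depths, in meters).
tsdf, weight = 0.0, 0.0
for depth in (1.02, 1.04, 0.5):
    tsdf, weight = fuse(tsdf, weight, truncated_sdf(depth, voxel_depth=1.0, trunc=0.1))
print(round(tsdf, 3), weight)  # 0.3 2.0
```

The third view places the surface far in front of the voxel, so it is skipped; the mesh is later extracted at the zero crossing of the fused values.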

Our project can be split into two components. The first focuses on getting a clean and complete object-level 3D Gaussian Splatting representation from image inputs and a text-based object query. The second focuses on converting the 3DGS object into a usable 3D mesh. For this project, we will prioritize the second component, focusing on mesh reconstruction from 3D Gaussian representations, while aiming to extend toward the full pipeline if time permits.

As our baseline goal, we will focus on converting object-level 3DGS representations into meshes using existing datasets. Several methods such as GS2Mesh and SuGaR have demonstrated that this task is feasible. Our primary deliverables for this stage are:

1. A working pipeline for GS-to-mesh conversion, based on the GS2Mesh framework.

2. An improved mesh reconstruction algorithm by upgrading the depth fusion module. We aim to utilize SOTA monocular depth predictor networks, paired with an improved adaptive TSDF implementation.

3. A Windows application that renders the completed mesh, allows camera movement, and displays the camera frustums of the original images. We will build this GUI in C++ using the Windows API and rasterize the mesh as in homework 1, adding shading and movable light sources. One of our group members has experience with the Windows API and will focus on this task.
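Drawing a camera frustum in the viewer comes down to back-projecting the four image corners to some display depth and transforming them into world space. The sketch below assumes a pinhole camera with hypothetical intrinsics and a camera-to-world pose given as a rotation matrix and translation; it is written in Python for brevity, and the same arithmetic ports directly to the C++ application.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def frustum_corners(fx: float, fy: float, cx: float, cy: float,
                    width: int, height: int, depth: float) -> List[Vec3]:
    """Back-project the four image corners to `depth` in camera space."""
    pixels = [(0, 0), (width, 0), (width, height), (0, height)]
    return [((u - cx) / fx * depth, (v - cy) / fy * depth, depth) for u, v in pixels]


def to_world(point: Vec3, rotation: Sequence[Sequence[float]], translation: Vec3) -> Vec3:
    """Apply a camera-to-world transform: R * p + t."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Hypothetical 100x100 camera, identity rotation, placed 5 units along world z.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
corners_cam = frustum_corners(fx=100.0, fy=100.0, cx=50.0, cy=50.0,
                              width=100, height=100, depth=2.0)
corners_world = [to_world(p, identity, (0.0, 0.0, 5.0)) for p in corners_cam]
print(corners_world[0])  # (-1.0, -1.0, 7.0)
```

The wireframe is then the four edges from the camera center to these corners plus the rectangle joining them.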

If we make strong progress and stay ahead of schedule, we aim to implement the full object-level extraction and mesh reconstruction pipeline from raw image inputs and text prompts. This pipeline includes:

1. Using an off-the-shelf 3DGS reconstruction method (e.g., a mobile capture app) to generate a scene-level 3DGS.

2. Applying a diffusion-based inpainting model to fill in missing geometry and complete the object’s 3DGS.

3. Finally, feeding the completed GS into our mesh reconstruction module to produce a usable mesh asset.

Another improvement, if time permits, is to add volumetric rendering to the Windows app so that we can switch between viewing the Gaussian splats and the reconstructed mesh, which makes direct comparison much easier. For this we may need GPU hardware acceleration through DirectX 11.

Many of the required components have existing implementations or pretrained models, making this a plausible goal.

1. Quantitative evaluation. Following SuGaR and GS2Mesh, we plan to use metrics such as PSNR, SSIM, and LPIPS to compare our meshes against ground truth and against meshes generated by other methods. These are image-space metrics, so we compute them on rendered views of each mesh.

2. Qualitative analysis by rendering and visual inspection of the reconstructed meshes.

These deliverables are achievable with available tools and datasets, and will form the core evaluation criteria for the success of this project.
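As a reminder of what the simplest of these metrics measures, PSNR is a log-scaled mean squared error between a reference image and a rendered one. The sketch below works on flat lists of pixel intensities in [0, 1]; the pixel values are made up for illustration, and a real evaluation would run an array library over full images.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], rendered: Sequence[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if len(reference) != len(rendered) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 4-pixel images: two pixels differ by 0.1, so MSE = 0.005.
reference = [0.0, 0.5, 1.0, 0.25]
rendered = [0.1, 0.5, 0.9, 0.25]
print(round(psnr(reference, rendered), 2))  # 23.01
```

Higher is better. SSIM and LPIPS need windowed statistics and a pretrained network respectively, so they are better taken from existing implementations.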

Week 1: Create a plan for our mesh reconstruction algorithm. Start with an adaptive volumetric TSDF algorithm, then add iso-octrees to improve mesh fidelity [7]. Begin working on the visualizer app with viser-style camera frustums.

Week 2: Try to finish mesh reconstruction and the visualizer.

Week 3: Optionally implement diffusion-based PLY infilling to fill in missing geometry. Set up the structure-from-motion -> 3DGS -> density pruning chain, which removes floaters and unnecessarily large Gaussians before they are fed into our improved meshing algorithm. One of our team members already has the pipeline to create the initial Gaussian splat from another project.

Week 4: Create the infrastructure necessary to automatically route from start to end. Finishing touches on the visualizer. Qualitatively and quantitatively compare our results to other mesh reconstruction papers, and write our report.
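The pruning step planned for Week 3 could, in its simplest form, drop splats that are nearly transparent or stretched far beyond the object's scale. This is only a sketch under that assumption: the `Splat` record, the `prune` helper, and both thresholds are hypothetical and would need tuning per scene.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Splat:
    """Hypothetical minimal record: opacity in [0, 1] and per-axis scale."""
    opacity: float
    scale: Tuple[float, float, float]


def prune(splats: List[Splat], min_opacity: float = 0.05, max_scale: float = 0.5) -> List[Splat]:
    """Keep splats that are opaque enough and not oversized along any axis."""
    return [
        s for s in splats
        if s.opacity >= min_opacity and max(s.scale) <= max_scale
    ]


splats = [
    Splat(0.90, (0.01, 0.02, 0.01)),  # kept
    Splat(0.01, (0.01, 0.01, 0.01)),  # nearly transparent, dropped
    Splat(0.80, (3.00, 0.02, 0.02)),  # stretched along one axis, dropped
]
kept = prune(splats)
print(len(kept))  # 1
```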

Our work mainly builds on GS2Mesh’s GitHub repo.

1. Most of the listed papers require a single RTX 3090-class GPU (24 GB memory, 384-bit interface). None of them require multi-GPU training.

2. We can either run projects locally or use online services such as Google Cloud GPUs.

1. Environments: CUDA 11.8 + Conda

2. Libraries/Tools: PyTorch, Open3D, COLMAP, Windows API, possibly DirectX 11

1. DTU (Very traditional MVS dataset of single objects)

2. NeRF Dataset (traditional lego, barn, …; combination of llff/)

3. Mip-NeRF/Mip-NeRF 360 Dataset (Slightly larger scenes like bonsai)

1. SuGaR: Surface-Aligned Gaussian Splatting for Efficient 3D Mesh Reconstruction and High-Quality Mesh Rendering. arXiv:2311.12775.

2. DN-Splatter: Depth and Normal Priors for Gaussian Splatting and Meshing. arXiv:2403.17822.

3. 2D Gaussian Splatting for Geometrically Accurate Radiance Fields. arXiv:2403.17888.

4. GS2Mesh: Surface Reconstruction from Gaussian Splatting via Novel Stereo Views. arXiv:2404.01810.

5. AGS-Mesh: Adaptive Gaussian Splatting and Meshing with Geometric Priors for Indoor Room Reconstruction Using Smartphones. arXiv:2411.19271.

6. Marching Cubes: A High Resolution 3D Surface Construction Algorithm. ACM SIGGRAPH Computer Graphics.

7. Unconstrained Isosurface Extraction on Arbitrary Octrees.